The Principle of Diversity: Training Stronger Vision Transformers Calls for Reducing All Levels of Redundancy https://arxiv.org/abs/2203.06345

#cv #vit

#cv #vit

CLIP Models are Few-shot Learners: Empirical Studies on VQA and Visual Entailment https://arxiv.org/abs/2203.07190

#multimodal #zero_shot

#multimodal #zero_shot

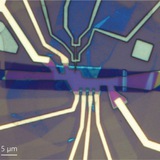

Parametric exploration of zero-energy modes in three-terminal InSb-Al nanowire devices https://arxiv.org/abs/2203.00773

#physics #majoranas #topological

#physics #majoranas #topological

Forwarded from Artificial Intelligence

Bamboo: Building Mega-Scale Vision Dataset Continually with Human-Machine Synergy

Github: https://github.com/davidzhangyuanhan/bamboo

Project: https://opengvlab.shlab.org.cn/bamboo/home

Paper: https://arxiv.org/abs/2203.07845

@ArtificialIntelligencedl

Github: https://github.com/davidzhangyuanhan/bamboo

Project: https://opengvlab.shlab.org.cn/bamboo/home

Paper: https://arxiv.org/abs/2203.07845

@ArtificialIntelligencedl

👍1

Data-Driven Offline Optimization For Architecting Hardware Accelerators https://arxiv.org/abs/2110.11346

#rl #chips

#rl #chips

Inadequately Pre-trained Models are Better Feature Extractors https://arxiv.org/abs/2203.04668

#cv #feature_extraction

#cv #feature_extraction

Respecting causality is all you need for training physics-informed neural networks https://arxiv.org/abs/2203.07404

#causality #pinn

#causality #pinn

Three things everyone should know about Vision Transformers https://arxiv.org/abs/2203.09795

#cv #vit

#cv #vit

BaGuaLu: Targeting Brain Scale Pretained Models with over 37 Million Cores

https://keg.cs.tsinghua.edu.cn/jietang/publications/PPOPP22-Ma%20et%20al.-BaGuaLu%20Targeting%20Brain%20Scale%20Pretrained%20Models%20w.pdf

#large_scale #moe

https://keg.cs.tsinghua.edu.cn/jietang/publications/PPOPP22-Ma%20et%20al.-BaGuaLu%20Targeting%20Brain%20Scale%20Pretrained%20Models%20w.pdf

#large_scale #moe

Mapping global dynamics of benchmark creation and saturation in artificial intelligence https://arxiv.org/abs/2203.04592

#benchmarks #pwc

#benchmarks #pwc

Scaling Up Your Kernels to 31x31: Revisiting Large Kernel Design in CNNs https://arxiv.org/abs/2203.06717

#cv #cnn

#cv #cnn

In- and out-of-plane field induced quantum spin-liquid states in a more ideal Kitaev material: BaCo2(AsO4)2 https://arxiv.org/abs/2106.13418

#physics #ksl

#physics #ksl

Complex paths around the sign problem https://journals.aps.org/rmp/abstract/10.1103/RevModPhys.94.015006

#physics #mc #sign_problem

#physics #mc #sign_problem

Reviews of Modern Physics

Complex paths around the sign problem

A promising path to solving QCD is on a computer by discretizing spacetime, rewriting the QCD Lagrangian to fit in that discretization, and then taking the appropriate continuum and infinite volume limits. A problem with this method is that the calculation…

Drive&Segment: Unsupervised Semantic Segmentation of Urban Scenes via Cross-modal Distillation https://arxiv.org/abs/2203.11160

#cv #self_supervised #semantic #multimodal

#cv #self_supervised #semantic #multimodal

Kramers-Wannier-like Duality Defects in (3+1)D Gauge Theories https://journals.aps.org/prl/abstract/10.1103/PhysRevLett.128.111601

#physics #topological

#physics #topological

Physical Review Letters

Kramers-Wannier-like Duality Defects in $(3+1)D$ Gauge Theories

We introduce a class of noninvertible topological defects in $(3+1)D$ gauge theories whose fusion rules are the higher-dimensional analogs of those of the Kramers-Wannier defect in the $(1+1)D$ critical Ising model. As in the lower-dimensional case, the presence…

Characterizing and Improving the Robustness of Self-Supervised Learning through Background Augmentations https://arxiv.org/abs/2103.12719

#cv #self_supervised

#cv #self_supervised