Refer-Agent: A Collaborative Multi-Agent System with Reasoning and Reflection for Referring Video Object Segmentation https://arxiv.org/abs/2602.03595

arXiv.org

Referring Video Object Segmentation (RVOS) aims to segment objects in videos based on textual queries. Current methods mainly rely on large-scale supervised fine-tuning (SFT) of Multi-modal Large...

Learning to Repair Lean Proofs from Compiler Feedback https://arxiv.org/abs/2602.02990

arXiv.org

As neural theorem provers become increasingly agentic, the ability to interpret and act on compiler feedback is critical. However, existing Lean datasets consist almost exclusively of correct...

❤1

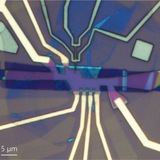

Universal Topological Gates from Braiding and Fusing Anyons on Quantum Hardware https://arxiv.org/abs/2601.20956

arXiv.org

Topological quantum computation encodes quantum information in the internal fusion space of non-Abelian anyonic quasiparticles, whose braiding implements logical gates. This goes beyond Abelian...

Expanding the Capabilities of Reinforcement Learning via Text Feedback https://arxiv.org/abs/2602.02482

arXiv.org

The success of RL for LLM post-training stems from an unreasonably uninformative source: a single bit of information per rollout as binary reward or preference label. At the other extreme,...

Goal-Guided Efficient Exploration via Large Language Model in Reinforcement Learning https://arxiv.org/abs/2509.22008

arXiv.org

Real-world decision-making tasks typically occur in complex and open environments, posing significant challenges to reinforcement learning (RL) agents' exploration efficiency and long-horizon...

Self-dual Higgs transitions: Toric code and beyond https://arxiv.org/abs/2601.20945

arXiv.org

The toric code, when deformed in a way that preserves the self-duality $\mathbb{Z}_2$ symmetry exchanging the electric and magnetic excitations, admits a transition to a topologically trivial...

🤯2👾1

We hid backdoors in binaries — Opus 4.6 found 49% of them https://quesma.com/blog/introducing-binaryaudit/

Quesma

BinaryAudit benchmarks AI agents using Ghidra to find backdoors in compiled binaries of real open-source servers, proxies, and network infrastructure.

👍6

An X-ray-emitting protocluster at z ≈ 5.7 reveals rapid structure growth https://www.nature.com/articles/s41586-025-09973-1

Nature

Nature - Discovery of a protocluster at z = 5.68, merely one billion years after the Big Bang, suggests that large-scale structure must have formed more rapidly in some regions of the...

Soft Contamination Means Benchmarks Test Shallow Generalization https://arxiv.org/abs/2602.12413

arXiv.org

If LLM training data is polluted with benchmark test data, then benchmark performance gives biased estimates of out-of-distribution (OOD) generalization. Typical decontamination filters use n-gram...

Data Repetition Beats Data Scaling in Long-CoT Supervised Fine-Tuning https://arxiv.org/abs/2602.11149

arXiv.org

Supervised fine-tuning (SFT) on chain-of-thought data is an essential post-training step for reasoning language models. Standard machine learning intuition suggests that training with more unique...

rePIRL: Learn PRM with Inverse RL for LLM Reasoning https://arxiv.org/abs/2602.07832

arXiv.org

Process rewards have been widely used in deep reinforcement learning to improve training efficiency, reduce variance, and prevent reward hacking. In LLM reasoning, existing works also explore...

❤3

Tensor Decomposition for Non-Clifford Gate Minimization https://arxiv.org/abs/2602.15285

arXiv.org

Fault-tolerant quantum computation requires minimizing non-Clifford gates, whose implementation via magic state distillation dominates the resource costs. While $T$-count minimization is...

👍3😈1

BRIDGE: Predicting Human Task Completion Time From Model Performance https://arxiv.org/abs/2602.07267

arXiv.org

Evaluating the real-world capabilities of AI systems requires grounding benchmark performance in human-interpretable measures of task difficulty. Existing approaches that rely on direct human task...

😁1

Forwarded from Love. Death. Transformers.

If you're preparing for an interview at a decent place, this is worth a read:

https://djdumpling.github.io/2026/01/31/frontier_training.html

Alex Wa’s Blog

frontier model training methodologies

How do labs train a frontier, multi-billion parameter model? We look towards seven open-weight frontier models: Hugging Face’s SmolLM3, Prime Intellect’s Intellect 3, Nous Research’s Hermes 4, OpenAI’s gpt-oss-120b, Moonshot’s Kimi K2, DeepSeek’s DeepSeek…

👍3🔥3