Forwarded from Spark in me (Alexander)

Pre-trained BERT in PyTorch

https://github.com/huggingface/pytorch-pretrained-BERT

(1)

Model code here is just awesome.

The integrated DataParallel / DDP / FP16 wrappers are also awesome.

FP16 training with APEX just works (no idea about convergence yet, though).
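For context, a common reason FP16 training "just works" is dynamic loss scaling, which APEX's mixed-precision tooling performs. The loss is multiplied by a scale factor so that small gradients do not underflow in half precision. Steps whose gradients overflow are skipped and the scale is halved, and the scale is doubled again after a run of clean steps. Below is a minimal pure-Python sketch of that control logic, assuming gradients are plain floats. The class `DynamicLossScaler` and its defaults (`init_scale`, `growth_interval`) are hypothetical and are not APEX's API. FP16 storage is simulated with the standard library's half-precision `struct` format `'e'`.

```python
import math
import struct
from typing import List, Optional


def fits_in_fp16(value: float) -> bool:
    """Return True if `value` can be stored as a finite IEEE half-precision float."""
    try:
        packed = struct.pack("<e", value)  # 'e' is the 16-bit float format
    except OverflowError:
        return False  # magnitude too large for FP16
    return math.isfinite(struct.unpack("<e", packed)[0])


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest FP16 value (raises on overflow)."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


class DynamicLossScaler:
    """Hypothetical sketch of dynamic loss scaling; not APEX's actual API."""

    def __init__(self, init_scale: float = 2.0 ** 16, growth_interval: int = 2000):
        self.scale = init_scale
        self.growth_interval = growth_interval
        self._clean_steps = 0

    def step(self, true_grads: List[float]) -> Optional[List[float]]:
        """Simulate one step; return unscaled gradients, or None if skipped."""
        # Backprop through (loss * scale) multiplies every gradient by `scale`;
        # in this simulation those scaled gradients are what gets stored in FP16.
        scaled = [g * self.scale for g in true_grads]
        if not all(fits_in_fp16(g) for g in scaled):
            # Overflow: skip this optimizer step and halve the scale.
            self.scale /= 2.0
            self._clean_steps = 0
            return None
        # Round to FP16, then divide the scale back out in full precision.
        unscaled = [to_fp16(g) / self.scale for g in scaled]
        self._clean_steps += 1
        if self._clean_steps % self.growth_interval == 0:
            # A run of overflow-free steps: try a larger scale again.
            self.scale *= 2.0
        return unscaled
```

For example, with `init_scale=2.0 ** 16` a gradient of 1.0 overflows (65536 exceeds the FP16 maximum of 65504), so the step is skipped and the scale drops to 2**15. A gradient of 1e-8, which rounds to zero in plain FP16, then survives scaling with a relative error well under 0.1%.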

(2)

As for the model weights, I cannot really tell: there is no dedicated Russian model.

The only problem I am facing now: with large embedding bags, the batch size is literally 1-4 even for smaller models.

And training models with SentencePiece is kind of feasible for morphologically rich languages, but you will always worry about generalization.
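To see why a large vocabulary squeezes the batch size, a back-of-the-envelope estimate helps. The sketch below counts only the embedding table, assuming FP32 values trained with Adam (weights, gradients and two moment buffers, i.e. four copies). The vocabulary sizes are illustrative and the hidden size of 768 is BERT-Base's. Activations, which grow with batch size and sequence length, are deliberately ignored: the point is that whatever the table consumes is memory the activations can no longer use. The function name `embedding_memory_gib` is hypothetical.

```python
def embedding_memory_gib(vocab_size: int, hidden_size: int,
                         bytes_per_value: int = 4, copies: int = 4) -> float:
    """Approximate memory (GiB) held by one embedding table during training.

    copies=4 assumes FP32 weights + gradients + Adam's two moment estimates.
    """
    n_params = vocab_size * hidden_size
    return n_params * bytes_per_value * copies / 2 ** 30


# Illustrative sizes only: a ~30k English-style vocabulary vs. a ~120k one.
for vocab in (30_000, 120_000):
    print(f"{vocab:>7} tokens -> {embedding_memory_gib(vocab, 768):.2f} GiB")
```

Under these assumptions the table alone grows from about 0.34 GiB to about 1.37 GiB, and that memory is taken away from activations before a single batch is processed.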

(3)

I have not tried the masked-LM pre-training (or the next-sentence prediction pre-training) yet. I hope that properly initializing the embeddings will also work for a closed domain with a smaller model (they pre-train for 4 days on 4+ TPUs, lol).
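One way to read "properly initializing embeddings" is to seed a smaller model's embedding table from pre-trained vectors wherever a token is shared, and to fall back to a small random initialization elsewhere. The pure-Python sketch below assumes the pre-trained vectors are available as a `dict` mapping token to a list of floats. The function `init_embeddings` and the toy tokens and vectors are hypothetical, and the fallback standard deviation of 0.02 mirrors the `initializer_range` in the published BERT configs but is otherwise an arbitrary choice.

```python
import random
from typing import Dict, List, Sequence


def init_embeddings(vocab: Sequence[str],
                    pretrained: Dict[str, List[float]],
                    dim: int,
                    std: float = 0.02,
                    seed: int = 0) -> List[List[float]]:
    """Build a len(vocab) x dim embedding matrix (hypothetical helper).

    Rows for tokens found in `pretrained` are copied; the rest are drawn
    from N(0, std^2). Pre-trained vectors must already have length `dim`.
    """
    rng = random.Random(seed)  # local RNG so results are reproducible
    matrix = []
    for token in vocab:
        vector = pretrained.get(token)
        if vector is not None:
            if len(vector) != dim:
                raise ValueError(
                    f"vector for {token!r} has size {len(vector)}, expected {dim}")
            matrix.append(list(vector))  # copy so the source dict is not aliased
        else:
            matrix.append([rng.gauss(0.0, std) for _ in range(dim)])
    return matrix


# Toy usage: two tokens have pre-trained vectors, two are initialized randomly.
toy = {"привет": [0.1, 0.2, 0.3], "мир": [0.4, 0.5, 0.6]}
emb = init_embeddings(["[PAD]", "привет", "мир", "бот"], toy, dim=3)
```

Copying shared rows keeps whatever the pre-trained vectors already encode, while the small random rows stay close to zero so that unseen tokens do not dominate early training.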

(4)

Why even tackle such models?

Chat, dialogue and machine comprehension models are complex and require one-off feature engineering.

Being able to fine-tune something like BERT on publicly available benchmarks and then on your own domain could provide a good way to embed complex situations (like questions in dialogues).

#nlp

#deep_learning

Forwarded from Hacker News

AlphaStar: Mastering the Real-Time Strategy Game StarCraft II (Score: 112+ in 1 hour)

Link: https://readhacker.news/s/3Wamk

Comments: https://readhacker.news/c/3Wamk

https://www.reddit.com/r/MachineLearning/comments/ajgzoc/we_are_oriol_vinyals_and_david_silver_from/

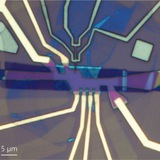

Physics in Two Dimensions https://courses.physics.illinois.edu/phys598PTD/fa2013/